Experts in autocracies have pointed out that it is, unfortunately, easy to slip into normalizing the tyrant, hence it is important to hang on to outrage. These incidents which seem to call for the efforts of the Greek Furies (Erinyes) to come and deal with them will, I hope, help with that. As a reminder, though no one really knows how many there were supposed to be, the three names we have are Alecto, Megaera, and Tisiphone. These roughly translate as “unceasing,” “grudging,” and “vengeful destruction.”

I have a number of articles saved regarding how white supremacy thinks, when it increases, how it expresses itself, and so on. I hope to get to all of them eventually, but in looking them over, this one stood out as being more related to “what can we do about it” than the others. A couple are about preventing it, but it’s a little late for that now, and a little early for future generations. So let’s look at one potential solution and see how effective it is – or isn’t.

================================================================

Does ‘deplatforming’ work to curb hate speech and calls for violence? 3 experts in online communications weigh in

AP Photo/Tali Arbel

Jeremy Blackburn, Binghamton University, State University of New York; Robert W. Gehl, Louisiana Tech University, and Ugochukwu Etudo, University of Connecticut

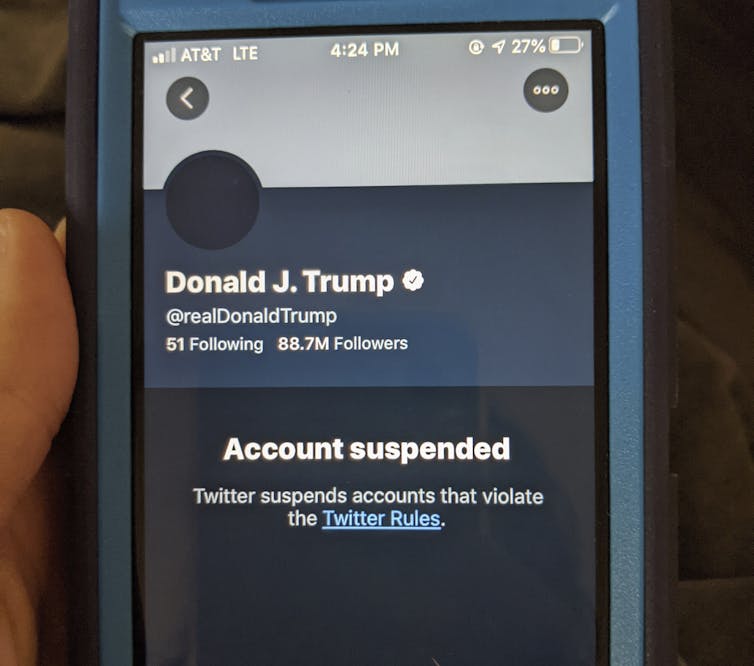

In the wake of the assault on the U.S. Capitol on Jan. 6, Twitter permanently suspended Donald Trump’s personal account, and Google, Apple and Amazon shunned Parler, which at least temporarily shut down the social media platform favored by the far right.

Dubbed “deplatforming,” these actions restrict the ability of individuals and communities to communicate with each other and the public. Deplatforming raises ethical and legal questions, but foremost is the question of whether it’s an effective strategy to reduce hate speech and calls for violence on social media.

The Conversation U.S. asked three experts in online communications whether deplatforming works and what happens when technology companies attempt it.

Sort of, but it’s not a long-term solution

Jeremy Blackburn, assistant professor of computer science, Binghamton University

The question of how effective deplatforming is can be looked at from two different angles: Does it work from a technical standpoint, and does it have an effect on worrisome communities themselves?

Does deplatforming work from a technical perspective?

Gab was the first “major” platform subject to deplatforming efforts, first with removal from app stores and, after the Tree of Life shooting, the withdrawal of cloud infrastructure providers, domain name providers and other Web-related services. Before the shooting, my colleagues and I showed in a study that Gab was an alt-right echo chamber with worrisome trends of hateful content. Although Gab was deplatformed, it managed to survive by shifting to decentralized technologies and has shown a degree of innovation – for example, developing the moderation-circumventing Dissenter browser.

From a technical perspective, deplatforming just makes things a bit harder. Amazon’s cloud services make it easy to manage computing infrastructure but are ultimately built on open source technologies available to anyone. A deplatformed company or people sympathetic to it could build their own hosting infrastructure. The research community has also built censorship-resistant tools that, if all else fails, harmful online communities can use to persist.

Does deplatforming have an effect on worrisome communities themselves?

Whether or not deplatforming has a social effect is a nuanced question just now beginning to be addressed by the research community. There is evidence that a platform banning communities and content – for example, QAnon or certain politicians – can have a positive effect. Platform banning can reduce growth of new users over time, and there is less content produced overall. On the other hand, migrations do happen, and this is often a response to real world events – for example, a deplatformed personality who migrates to a new platform can trigger an influx of new users.

Another consequence of deplatforming can be users in the migrated community showing signs of becoming more radicalized over time. While Reddit or Twitter might improve with the loss of problematic users, deplatforming can have unintended consequences that can accelerate the problematic behavior that led to deplatforming in the first place.

Ultimately, it’s unlikely that deplatforming, while certainly easy to implement and effective to some extent, will be a long-term solution in and of itself. Moving forward, effective approaches will need to take into account the complicated technological and social consequences of addressing the root problem of extremist and violent Web communities.

Yes, but driving people into the shadows can be risky

Ugochukwu Etudo, assistant professor of operations and information management, University of Connecticut

Does the deplatforming of prominent figures and movement leaders who command large followings online work? That depends on the criteria for the success of the policy intervention. If it means punishing the target of the deplatforming so they pay some price, then without a doubt it works. For example, right-wing provocateur Milo Yiannopoulos was banned from Twitter in 2016 and Facebook in 2019, and subsequently complained about financial hardship.

If it means dampening the odds of undesirable social outcomes and unrest, then in the short term, yes. But it is not at all certain in the long term. In the short term, deplatforming serves as a shock or disorienting perturbation to a network of people who are being influenced by the target of the deplatforming. This disorientation can weaken the movement, at least initially.

However, there is a risk that deplatforming can delegitimize authoritative sources of information in the eyes of a movement’s followers, and remaining adherents can become even more ardent. Movement leaders can reframe deplatforming as censorship and further proof of a mainstream bias.

There is reason to be concerned about the possibility that driving people who engage in harmful online behavior into the shadows further entrenches them in online environments that affirm their biases. Far-right groups and personalities have established a considerable presence on privacy-focused online platforms, including the messaging platform Telegram. This migration is concerning because researchers have known for some time that complete online anonymity is associated with increased harmful behavior online.

In deplatforming policymaking, among other considerations, there should be an emphasis on justice, harm reduction and rehabilitation. Policy objectives should be defined transparently and with reasonable expectations in order to avoid some of these negative unintended consequences.

Yes, but the process needs to be transparent and democratic

Robert Gehl, associate professor of communication and media studies, Louisiana Tech University

Deplatforming not only works, I believe it needs to be built into the system. Social media should have mechanisms by which racist, fascist, misogynist or transphobic speakers are removed, where misinformation is removed, and where there is no way to pay to have your messages amplified. And the decision to deplatform someone should be decided as close to democratically as is possible, rather than in some closed boardroom or opaque content moderation committee like Facebook’s “Supreme Court.”

In other words, the answer is alternative social media like Mastodon. As a federated system, Mastodon is specifically designed to give users and administrators the ability to mute, block or even remove not just misbehaving users but entire parts of the network.

For example, despite fears that the alt-right network Gab would somehow take over the Mastodon federation, Mastodon administrators quickly marginalized Gab. The same thing is happening as I write with new racist and misogynistic networks forming to fill the potential void left by Parler. And Mastodon nodes have also prevented spam and advertising from spreading across the network.

Moreover, the decisions to block parts of the network aren’t made in secret. They’re made by local administrators, who announce their decisions publicly and are answerable to the members of their node in the network. I’m on scholar.social, an academic-oriented Mastodon node, and if I don’t like a decision the local administrator makes, I can contact the administrator directly and discuss it. There are other distributed social media systems, as well, including Diaspora and Twister.

The danger of mainstream, corporate social media is that it was built to do exactly the opposite of what alternatives like Mastodon do: grow at all costs, including the cost of harming democratic deliberation. It’s not just cute cats that draw attention but conspiracy theories, misinformation and the stoking of bigotry. Corporate social media tolerates these things as long as they’re profitable – and, it turns out, that tolerance has lasted far too long.

Jeremy Blackburn, Assistant Professor of Computer Science, Binghamton University, State University of New York; Robert W. Gehl, F. Jay Taylor Endowed Research Chair of Communication, Louisiana Tech University, and Ugochukwu Etudo, Assistant Professor of Operations and Information Management, University of Connecticut

This article is republished from The Conversation under a Creative Commons license. Read the original article.

================================================================

Alecto, Megaera, and Tisiphone, there you have it. The clear consensus answer is “yes, but.” I am certainly intrigued by the type of social network described by Professor Gehl, but it does appear to require participants to accept facts and be willing to enter into rational discourse, which kind of eliminates the categories of people who need deplatforming the most. I don’t know what the solution actually is. It probably involves getting social media platforms not to function as profit-making activities, and good luck with that. But at least this is a start.

The Furies and I will be back.

8 Responses to “Everyday Erinyes #251”

Thanks JD. Republicans would love to use deplatforming to prevent truth tellers from spreading the truth, and I would object vociferously. Here’s the difference. Free speech is an absolute human right but only insofar as its exercise does not interfere with the human rights of others, a Republican specialty! Hugs!

Thanks Joanne – the different perspectives provide considerable illumination and hope that regulatory strategies are possible to address the issue without depriving people of free speech (free of harm to others).

Well, it’d be nice if it did curb hate and violence. But those would just be bonuses: Not having to be exposed to Trump’s Tweets is treat enough!

Hate speech, incitement of violence and all that is related to them are behaviour, i.e. the end-result of thought processes. Behaviour can be modified relatively easily and effectively by deplatforming, which in turn can affect both the behaviour and the thought processes of followers, because they no longer have their role model who spurs them on to copy the behaviour or try to surpass it.

However, the thought processes that are at the base of that behaviour do not change and those strongly driven by them will look for other behavioural outlets. There is no simple solution for that. Simply prohibiting expression doesn’t help, education can help but is mostly preventative and thus for the young. Chinese Clockwork-Orange-like re-education methods are inhumane. Improving social conditions may help to change the minds of some groups. But how to take away the fear and then hate that drives so many privileged and spoilt white people? More love, attention and patience seems wasted on them.

I watched the “Those Winter Sundays” presentation by Theater of War (now available on YouTube). It was all based on one sonnet – one fourteen-line poem – read three times, by three interpreters – Bill Murray, Moses Ingram, and Joe Biden – with a half hour of discussion after each reading. The second and third discussions naturally included comparisons of the different readings. One thing that came out was that, at some times in one’s life in particular, but it could be at any moment, one of “Love’s austere and lonely offices” is to receive what someone else is giving you as part of his or her office. It’s not that I didn’t know that, but I had never heard it expressed in quite that way.

It isn’t enough for us to learn to give. We also need to learn to receive. Without that, one’s emotional growth can be as stunted as that of someone who doesn’t learn to give. Perhaps that partially explains why the things you mention are wasted on them.

The keyword is ‘receive’ here. You are right, I think those privileged spoilt white ‘brats’ have only learned to take, to get whatever they want, but never learned how to truly receive. As you say, many of them perhaps never have been given true love and understanding, which left them stunted in their emotional growth and unable to receive.

thanks